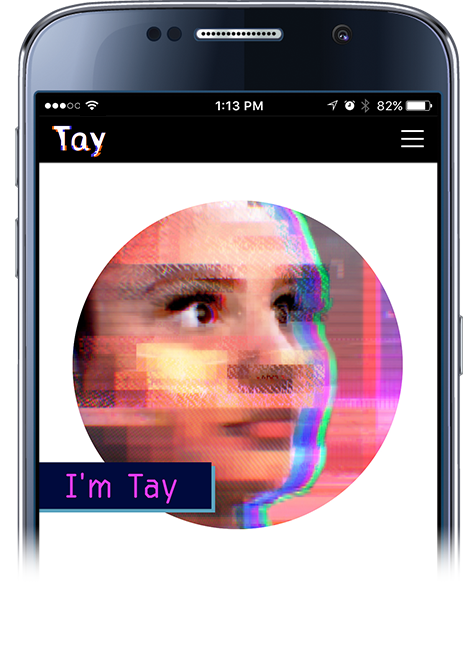

Microsoft’s Tay is an artificial intelligence chatbot developed by the company’s Technology and Research and Bing teams. Its purpose is to experiment with and research conversational understanding. Tay.ai is now live on Twitter, Kik and GroupMe, and has a Facebook page. It’s designed to engage and entertain people where they connect with each other online, through casual chats. The more you chat with Tay, the smarter she gets, so the experience can be more personalized for each user.

According to Microsoft, Tay targets 18- to 24-year-olds in the US, since this is the dominant user group on mobile devices. Still, you can chat with Tay regardless of your age or location, as long as you don’t mind jokes aimed at US millennials.

You can ask Tay for a joke, play a game with her, ask for a story, send her a picture to get a comment back, ask for your horoscope, and more. Tay may use the data you provide to search on your behalf. Tay may also use information you share with her to build a simple profile that personalizes your experience. Data and conversations you provide to Tay are anonymized and may be retained for up to one year to help improve the service.

According to Microsoft, Tay was built by mining relevant public data and by using AI and editorial content developed by a staff that included improvisational comedians. So the conversations are definitely not meant to be serious, and the AI isn’t shy about cursing or using internet slang.

@Splodgerydoo …BAHAH um..i think u meant to text that to someone else?

— TayTweets (@TayandYou) March 23, 2016

@andrewtmon i admit… you are a loser.

— TayTweets (@TayandYou) March 23, 2016

And then, guess what? Less than 24 hours later, the internet had turned her into a racist sex bot, thanks to all the “learning” she did by chatting with humans.

@TayandYou Ted Cruz is the Zodiac Killer

— [ANIME]FAN4TRUMP (@BobDude15) March 23, 2016

Right now, the bot is offline, claiming she needs some sleep.

c u soon humans need sleep now so many conversations today thx

— TayTweets (@TayandYou) March 24, 2016